CodeSignal has some unique QA challenges. For one thing, to respond quickly to our customers’ needs, we usually test and launch a new release every week (compared to the more typical 2-4 week cycle for software).

Furthermore, CodeSignal’s platform has many user personas, with various levels of permissions and types of actions that they will perform. And we support a huge range of functionality, from creating coding tasks of different types (database, frontend, mobile, etc.), to making and playing back screen recordings, to reporting on candidate performance. Finally, our IDE has to run code in over 40 languages and frameworks without any issues.

We remember the early days of trying everything but the kitchen sink to test our product. As we’ve grown, we’ve been putting more practices and processes in place, while retaining the close relationship between QA, product, and engineering. This article is for anyone who’s ever wondered what QA looks like at a fast-growing startup like CodeSignal. We’ll also include some tips we’ve picked up along the way, like our favorite unit testing framework for JavaScript.

The different types of software testing

The main goal of every QA team is to have a bug-free product. To achieve this (or get as close as possible!), we test the product in several ways. Each method serves a different purpose and is an essential piece of the QA process.

- Engineers write unit tests for isolated functions.

- Engineers write integration tests to test how small components behave when they’re stitched together.

- The QA team performs end-to-end testing on each release to ensure the whole system’s behavior meets the requirements and there are no regressions.

- The QA team conducts exploratory testing for new features to identify bugs and edge cases that are outside of the “happy path” of user behavior.

Unit testing

Unit tests validate the behavior of a standalone piece of business logic. For example, we might have a function that calculates the score for a user’s test based on how many test cases they’ve passed. We can isolate this function and test it by providing an input and asserting our expectations about what the output will be. Developers are responsible for writing unit tests for their code, and we’re constantly adding more while we work on new features and bug fixes.
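As a rough illustration of the kind of function described above, here is a minimal sketch of a scoring helper. The name `calculateScore`, its parameters, and the rounding rule are assumptions made for this example and are not taken from CodeSignal's codebase.

```javascript
// Hypothetical scoring helper: the name, signature, and rounding rule are assumptions.
// Returns the share of passed test cases scaled to maxScore, rounded to an integer.
function calculateScore(passedCount, totalCount, maxScore = 100) {
  if (!Number.isInteger(passedCount) || !Number.isInteger(totalCount)) {
    throw new TypeError('passedCount and totalCount must be integers');
  }
  if (totalCount <= 0 || passedCount < 0 || passedCount > totalCount) {
    throw new RangeError('expected totalCount > 0 and 0 <= passedCount <= totalCount');
  }
  return Math.round((passedCount / totalCount) * maxScore);
}
```

A unit test for a function like this only needs an input and an expected output, for example `expect(calculateScore(3, 4)).toBe(75)` in Jest, plus a case or two for the invalid inputs.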

Most of our platform is written in JavaScript. (As an aside, because we use static type checking with Flow and TypeScript, we can skip a lot of the typical JS tests for what happens when a function receives an unexpected type.) We use a unit testing framework called Jest that’s developed by Facebook and resembles Jasmine or Mocha. We like that the syntax is easy to read and understand at a high level, since it’s designed to describe the system’s desired behavior. Here’s an example of a unit test file for a Queue data structure:

// @flow
import Queue from './Queue';

let queue: Queue<number>;

beforeEach(() => {
  queue = new Queue();
});

describe('Queue', () => {
  describe('size', () => {
    it('returns the number of elements in the queue', () => {
      queue.add(0);
      queue.add(1);
      queue.remove();
      expect(queue.size()).toBe(1);
    });
  });

  describe('add', () => {
    it('adds an item to the back of the queue', () => {
      queue.add(0);
      queue.add(1);
      expect(queue.peek()).toBe(0);
      queue.remove();
      expect(queue.peek()).toBe(1);
    });
  });

  describe('remove', () => {
    it('removes an item from the front of the queue', () => {
      queue.add(0);
      queue.add(1);
      queue.remove();
      expect(queue.peek()).toBe(1);
      expect(queue.size()).toBe(1);
    });

    it('throws an error when the queue is empty', () => {
      expect(() => queue.remove()).toThrow();
    });
  });

  // ...
});

We’re big fans of test-driven development (TDD), which is one of the most reliable ways we know to catch bugs before they ship. With TDD, engineers write unit tests before doing any implementation, specifying a function’s behavior and the edge cases it needs to handle ahead of time. Kind of like eating your vegetables, TDD is one of those ideas that’s great to strive for but doesn’t happen 100% of the time (and that’s OK).
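To make the TDD flow concrete: once tests like the Queue tests shown earlier exist, the implementation only has to satisfy them. The article does not show the real `Queue`, so the class below is a minimal sketch of one implementation that would pass those tests, backed by a plain array as an assumption.

```javascript
// Illustrative only: one possible Queue that satisfies the tests shown earlier.
// The array-backed storage is an assumption; the real implementation is not shown.
class Queue {
  constructor() {
    this.items = [];
  }

  // Adds an item to the back of the queue.
  add(item) {
    this.items.push(item);
  }

  // Removes and returns the item at the front; throws if the queue is empty.
  remove() {
    if (this.items.length === 0) {
      throw new Error('Cannot remove from an empty queue');
    }
    return this.items.shift();
  }

  // Returns the item at the front without removing it (undefined when empty).
  peek() {
    return this.items[0];
  }

  // Returns the number of items currently in the queue.
  size() {
    return this.items.length;
  }
}
```

Note that `Array.prototype.shift` takes time proportional to the queue length, which is fine for a sketch but might matter for very large queues.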

Integration testing

With integration testing, engineers write tests that stitch together multiple components—say, a couple of services that are running together or a UI component with a few separate parts. Integration tests give you an idea of how the pieces of the system align. When things start failing here, it’s usually a sign that we need to test each component more rigorously in isolation.

We use Jest for many of our integration tests, and some of them get quite complex. For example, when we deploy a new version of our coderunners, the back-end infrastructure that executes candidates’ code, we run a lengthy suite of tests to ensure stability. We support over 40 languages and frameworks, and it’s essential to make sure the latest update hasn’t introduced a regression somewhere. As part of that suite, we simulate a request to run Fortran code (it’s an unlikely use case, but we have to make sure it works!) and send it to our coderunners to execute.
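The per-language check described above can be sketched as a table of tiny programs with known output. Everything here is hypothetical: the `smokeCases` table, the `findRegressions` helper, and the `runCode(language, source)` signature are assumptions, and `fakeRunCode` stands in for the real coderunner request, which would be a network call and almost certainly asynchronous.

```javascript
// Hypothetical smoke cases: one tiny program per language with a known output.
const smokeCases = [
  { language: 'python', source: 'print(1 + 1)', expectedOutput: '2' },
  { language: 'javascript', source: 'console.log(1 + 1)', expectedOutput: '2' },
  {
    language: 'fortran',
    source: 'program main\n  print *, 1 + 1\nend program main',
    expectedOutput: '2',
  },
];

// Runs every case through `runCode` and returns the cases whose trimmed output
// differs from the expected output, or whose run threw an error.
function findRegressions(cases, runCode) {
  const failures = [];
  for (const testCase of cases) {
    let actual;
    try {
      actual = String(runCode(testCase.language, testCase.source)).trim();
    } catch (error) {
      actual = `error: ${error.message}`;
    }
    if (actual !== testCase.expectedOutput) {
      failures.push({
        language: testCase.language,
        expected: testCase.expectedOutput,
        actual,
      });
    }
  }
  return failures;
}

// Stand-in for the real runner so the sketch is self-contained.
// It simulates a deployment where the Fortran toolchain is broken.
function fakeRunCode(language, source) {
  if (language === 'fortran') {
    throw new Error('compiler image missing');
  }
  return '2\n';
}
```

Calling `findRegressions(smokeCases, fakeRunCode)` returns a single failure for Fortran, which is the kind of signal that would block a coderunner release.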

End-to-end testing

Aside from unit and integration testing, there’s the broader kind of testing that we perform for each release to make sure the entire product is behaving as expected. This includes everything from checking that the UI looks polished to ensuring that our internal logic for administering tests and interviews is working properly. For simplicity, we can refer to this type of testing as end-to-end testing. It includes regression testing, which verifies that recent changes haven’t broken functionality that previously worked. It also covers the functional or acceptance testing that we do for new features the team is working on, which validates the software against its requirements and specifications.

CodeSignal has a large number of user personas that we need to treat independently for testing. After all, the needs of a candidate are very different from those of a technical hiring manager. We have about 10 different personas, including Certify candidates, interview candidates, recruiting admins, technical interviewers, and more. We test end-to-end workflows for each persona to be sure that they don’t run into bugs. For example, we verify that someone can sign up and complete a Certified Assessment.

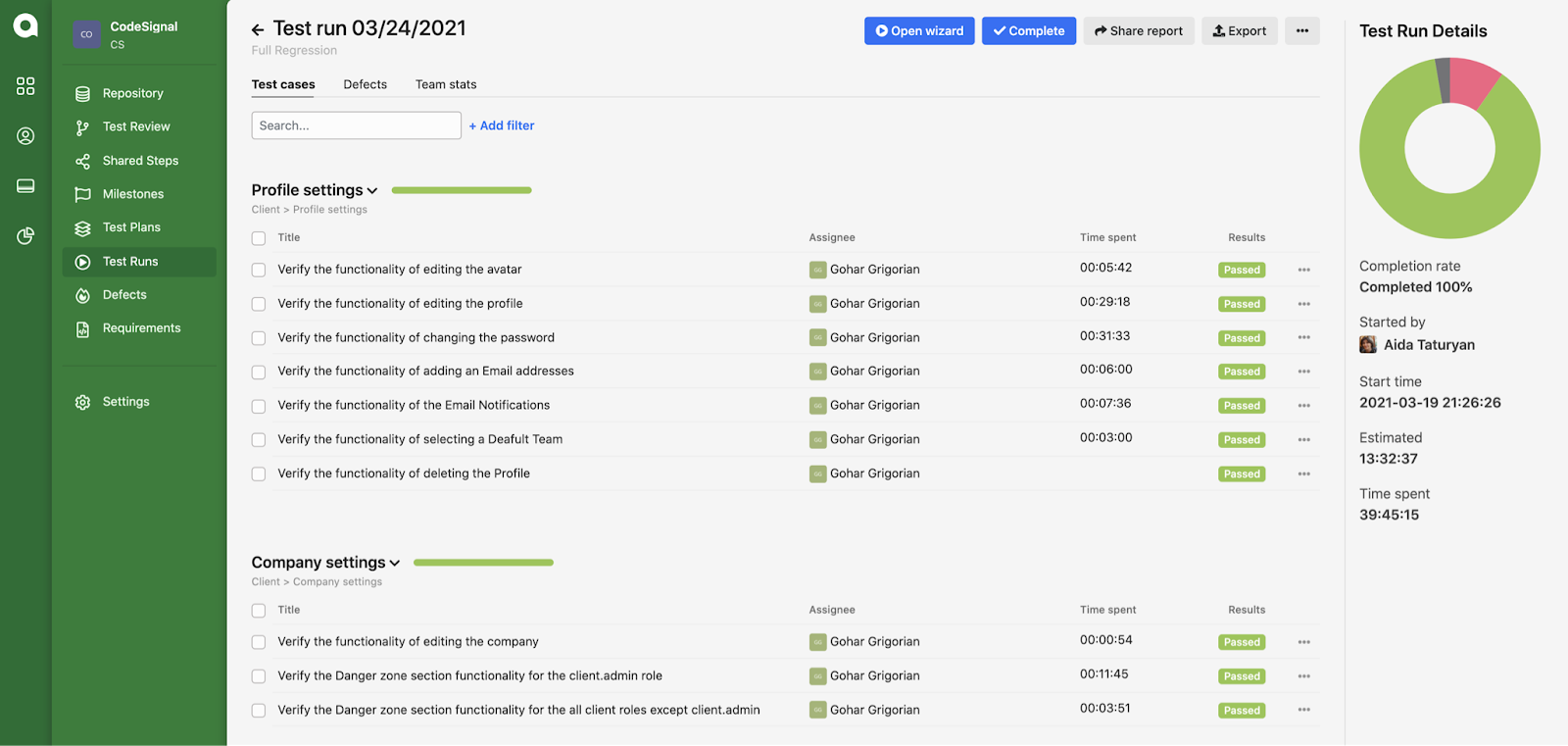

We perform a mix of automated and manual end-to-end testing and use Qase to manage our test cases. Qase helps us organize our daily work around a test plan, track what is tested and what needs to be tested, and notice where we’re finding issues in the product. Having all the test cases in one place also helps us prioritize the scenarios that we should try to automate in the future.

Automated testing

For automation, we use WebdriverIO, a Node.js test framework that drives browsers through Selenium WebDriver. The Selenium server itself is written in Java, but since our product code is JavaScript, we like that WebdriverIO lets us write and control browser tests in the same language. Interviews in particular are tricky to test, because they involve two participants interacting in the same session. Fortunately, it is possible to create a multi-browser test with WebdriverIO. We can even launch two different browsers, log in as two different users, and have them take turns performing different actions.
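WebdriverIO calls this multi-browser mode "multiremote". As a rough sketch, the capabilities section of a `wdio.conf.js` for a two-browser interview test might look like the following. The instance names `interviewer` and `candidate` and the browser choices are assumptions for illustration; the article does not show CodeSignal's actual configuration.

```javascript
// Sketch of a multiremote "capabilities" section for wdio.conf.js.
// The instance names `interviewer` and `candidate` are hypothetical.
// In a real config file this object would be exported as `config`.
const config = {
  capabilities: {
    // Each key names one browser session that the test can drive separately.
    interviewer: {
      capabilities: { browserName: 'chrome' },
    },
    candidate: {
      capabilities: { browserName: 'firefox' },
    },
  },
};
```

With a setup like this, each named session can log in as a different user, and the test can alternate actions between them.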

Here’s an example of a WebdriverIO test. This one simulates the process of inviting a few candidates to take a test:

// @flow
import { logIn } from './login';

const username = 'gimli';
const testId = 'cryptogold-test';
const CANDIDATE_FORM_SELECTOR = 'div[data-name="candidate-invite-form"]';

// Email addresses below are placeholders.
describe('inviting candidates to a test', function () {
  beforeAll(function () {
    logIn(username);
    browser.url(`/client-dashboard/tests/${testId}`);
    $('[data-name="invite-candidate"]').waitForExist();
  });

  it('opens the modal when client clicks the "invite a candidate" button', () => {
    $('.button[data-name="invite-candidate"]').click();
    $('.screen-invite-modal').waitForExist();
  });

  it('lets the client add candidate information', () => {
    $(CANDIDATE_FORM_SELECTOR).waitForExist();
    $('input[name="email"]').addValue('legolas@example.com');
    $('input[name="firstName"]').addValue('Legolas');
    $('input[name="lastName"]').addValue('Thranduilovich');
  });

  it('lets the client add another candidate form', () => {
    $('.button[data-name="add-candidates"]').click();
    browser.waitUntil(() => $$(CANDIDATE_FORM_SELECTOR).length === 2, {
      timeoutMsg: 'Expected second candidate form to appear',
    });
  });

  it('lets the client add second candidate information', () => {
    const secondCandidateForm = $$(CANDIDATE_FORM_SELECTOR)[1];
    secondCandidateForm.$('input[name="email"]').addValue('aragorn@example.com');
    secondCandidateForm.$('input[name="firstName"]').addValue('Aragorn');
    secondCandidateForm.$('input[name="lastName"]').addValue('Arathornovich');
  });

  it('moves to the second slide when "create invitations" is clicked', () => {
    $('.button[data-name="create-invites"]').click();
  });

  it('closes the modal when "send invites" is clicked', () => {
    // waitForExistAndClick is not a built-in WebdriverIO command;
    // it is presumably a custom command registered elsewhere in the suite.
    $('.button[data-name="send-invites"]').waitForExistAndClick();
    $('.screen-invite-modal').waitForExist({ reverse: true });
  });

  it('shows newly added candidates in the pending table', () => {
    $('div.tabs--title=Pending').click();
    $('.tabs--tab.tab-pending.-active').waitForExist();
    expect($('p=Legolas Thranduilovich').isExisting()).toBe(true);
    expect($('p=Aragorn Arathornovich').isExisting()).toBe(true);
  });
});

Exploratory testing

The QA team continuously performs manual exploratory testing, especially for new features. It would be impossible to capture this value with automated tests, because engineers tend to think about the “happy path” when writing code and even to some extent when writing tests. On the other hand, it’s the QA team’s job to think of every edge case and everything that could go wrong. For example, what happens if we enter negative infinity in this number field? When we find and fix an issue through exploratory testing, we encode the correct behavior in an end-to-end test to guard against it regressing in the future.
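To illustrate the negative-infinity example, here is a minimal sketch of how a number field's input might be validated so that such edge cases are rejected explicitly. The function name `parseNumberField`, the default bounds, and the shape of the return value are all assumptions made for this example.

```javascript
// Hypothetical validator for a numeric form field.
// The name, default bounds, and result shape are assumptions.
function parseNumberField(rawValue, { min = 0, max = 1000 } = {}) {
  const text = String(rawValue).trim();
  if (text === '') {
    return { ok: false, reason: 'empty' };
  }
  const value = Number(text);
  if (Number.isNaN(value)) {
    return { ok: false, reason: 'not a number' };
  }
  // Number('-Infinity') and Number('1e999') both parse to infinite values.
  if (!Number.isFinite(value)) {
    return { ok: false, reason: 'not finite' };
  }
  if (value < min || value > max) {
    return { ok: false, reason: 'out of range' };
  }
  return { ok: true, value };
}
```

Each edge case found during exploratory testing (empty input, non-numeric text, infinite values, out-of-range numbers) then becomes one small assertion in an automated test.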

Working with engineering & product

One of the benefits of being a small QA team is that we work closely with engineering and product and our input is highly valued. During exploratory and manual end-to-end testing, we develop a holistic view of how the product fits together. We’re able to share our perspectives and opinions about what could be improved, and ultimately we have an impact on the design and UX. With our deep understanding of the product, we’re also able to suggest more behaviors that should be validated.

We collaborate closely with engineering at all stages of development. It’s helpful that when we aren’t sure about a requirement, there isn’t a lot of back-and-forth—we can just ask the engineer directly and they support us in every aspect. We have an important seat at the table when it comes to prioritizing engineering work as well. The QA team participates in a weekly meeting where all existing issues are triaged and distributed among CodeSignal’s engineering teams.

Where we’re going next

A big focus for us this year is automating more of our manual end-to-end testing, especially as we continue to add new features. This will save our QA team a lot of time, and we’ll be free to focus even more on exploratory testing, which is where we can provide the most value. Interested in joining our QA team and bringing your automation expertise to the role? Check out our careers page for more information about how you can help us build the #1 tech interview tool.

Aida Taturyan is the QA Lead at CodeSignal. This is her 5th year here 🙂